This is a video of an excellent talk on the human connectome by neuroscientist Bobby Kasthuri of Argonne National Lab and the University of Chicago. (You can see me sitting on the floor in the corner :-)

The story below is for entertainment purposes only. No triggering of biologists is intended.

The Physicist and the Neuroscientist: A Tale of Two Connectomes

More Bobby, with more hair.

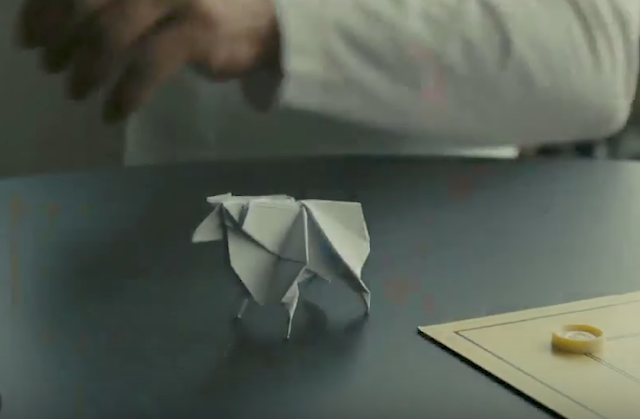

Steve burst into Bobby's lab, a small metal box under one arm. Startled, Bobby nearly knocked over his Zeiss electron microscope.

"I've got it!" shouted Steve. "My former student at DeepBrain sent me one of their first AGIs. It's hot out of their 3D neuromorphic chip printer."

"This is the thing that talks and understands quantum mechanics?" asked Bobby.

"Yes, if I just plug it in." He tapped the box. "This deep net has 10^10 connections! Within spitting distance of our brains, but much more efficient. They trained it in their virtual simulator world. Some of the algos are based on my polytope paper from last year. It not only knows QM, it understands what you mean by 'How much is that doggie in the window?'" :-)

"Has anyone mapped the connections?"

"Sort of. I mean, the strengths and topology are determined by the training and algos... It was all done virtually, then printed into spaghetti in this box."

"We've got to scan it right away! My new rig can measure 10^5 connections per second!"

"What for? It's silicon spaghetti. It works how it works, but we created it! Mapping specific connections... that's like collecting postage stamps."

"No, but we need to UNDERSTAND HOW IT WORKS!"

...

"Why don't you just ask IT?" thought Steve, as he left Bobby's lab.